Under the terms of the agreement, eligible travellers may enter Azerbaijan up to three times during the one-year period, with each stay lasting up to thirty days, providing significantly enhanced travel convenience and flexibility for HKSAR residents.

The HKSAR Immigration Department said the visa-free access is part of broader efforts under the Belt and Road Initiative to foster closer tourism, cultural and economic cooperation between Hong Kong and Azerbaijan.

The arrangement is expected to make short-term business, leisure and cultural trips more accessible, strengthening people-to-people ties in the region as well as facilitating deeper bilateral engagement.

The policy also underscores Azerbaijan’s expanding visa-free network; with the inclusion of this new arrangement, holders of HKSAR passports can now visit a total of approximately 175 countries and territories either without a visa or with visa-on-arrival privileges.

This development enhances the global mobility of Hong Kong travellers and reflects Azerbaijan’s ongoing commitment to facilitating international travel.

The Securities and Futures Commission (SFC), together with the Hong Kong Stock Exchange, has suspended the vetting of multiple listing applications that failed to meet regulatory standards and has asked major sponsors to undertake comprehensive reviews of their internal procedures to ensure compliance with listing requirements.

In an unusually firm move, the SFC and the exchange have instructed thirteen sponsor banks to examine how they prepare and oversee IPO filings, requiring them to submit detailed reviews within three months.

The vetting process for sixteen applications was halted after regulators found documents and submissions lacking in quality, clarity or adherence to established rules.

The wave of warning letters began in December, reflecting growing concern that the booming IPO market has stretched sponsors’ capacity and diluted quality oversight.

SFC Chief Executive Julia Leung emphasised that the sponsor’s gatekeeping role is “critical to maintaining the quality” of the capital market and investor confidence.

She warned that over-commitment by sponsors — particularly where principals are designated to work on six or more active applications simultaneously — undermines rigorous review and may attract restrictions on the number of applications they can handle.

Regulators also indicated that individuals involved in sponsor work will face tightened examination requirements after some banks were found to have allowed less experienced or ineligible staff to take on critical responsibilities.

The warnings come amid a record volume of listings in Hong Kong, which last year led global IPO fundraising, and a pipeline of applications that has put pressure on sponsors to balance quantity with quality.

Regulators have emphasised that maintaining high standards is essential to uphold the reputation of Hong Kong’s markets and protect investors, signaling that further enforcement actions or thematic inspections may follow if corrective measures are not implemented.

The announcement was made on January 30 at the 12th Annual Conference of Shenzhen-Hong Kong Qianhai Talents Cooperation, where authorities outlined plans to use the digital portal to match nearly two thousand full-time jobs and paid internships for young professionals from Hong Kong and Macao each year.

The platform will centralise opportunities in the region’s dynamic sectors, including finance, logistics and digital services, making it easier for aspiring workers to find employment across the boundary.

The Talent Connect system is intended to simplify cross-boundary employment procedures by linking with existing visa arrangements such as the Top Talent Pass Scheme and the Technology Talent Admission Scheme.

This linkage will allow qualified foreign nationals holding Hong Kong employment visas to transfer to short-term assignments in Qianhai without re-applying for separate mainland work permits, addressing bureaucratic barriers that have historically hampered mobility.

Complementing the online jobs portal, the Shenzhen-Hong Kong HR Alliance has been established by the Hong Kong Institute of Human Resource Management and the Qianhai Authority.

The alliance will work on mutual recognition of professional qualifications, common payroll standards and a shared compliance code to further reduce administrative friction for employees and employers navigating the cross-boundary talent landscape.

More than five hundred multinationals with operations on both sides have been invited to participate in working groups focused on talent mobility, tax equalisation and family-visa facilitation.

Officials say the programme will also alleviate practical deployment challenges for companies by offering a single window for work permissions, housing support and even schooling for accompanying dependents.

Shenzhen’s Qianhai authority has pledged to reserve subsidised housing units for participants in the scheme, though demand is expected to exceed supply in its first year.

Proponents argue that GBA Talent Connect underscores the region’s strategy of using collaborative platforms to expand its talent pool and strengthen its competitiveness amid intense regional competition for high-skilled professionals.

The regulation, which came into effect on January 25 and required all seated passengers on franchised and non-franchised buses to wear seat belts where fitted, will not be enforced while the law is revised to better reflect the legislative intent.

Transport and Logistics Secretary Mable Chan acknowledged that the legal text contains “technical deficiencies” that prevented it from extending the statutory requirement for seat belt use across all bus seats as originally intended.

After consultations with the Department of Justice, the government decided to promptly repeal the relevant clauses by publishing subsidiary legislation in the Gazette, meaning there is currently no statutory obligation for bus passengers to buckle up under the amended provisions.

The initial regulation had also sparked public confusion and criticism, partly because a former lawmaker highlighted that the wording would only have applied to newly registered buses from January 25, contrary to some government statements suggesting wider applicability.

This discrepancy contributed to debates over enforcement and compliance.

Under the original rules, non-compliance with the seat belt requirement could have led to fines of up to HK$5,000 and a maximum three-month prison sentence, though these penalties will not be applied now that the provisions have been repealed.

The suspension applies specifically to seat belt mandates for bus passengers; existing seat belt requirements for other vehicles such as private cars, taxis and light buses will remain in effect.

Looking ahead, the government plans to gather views from stakeholders and revise the legal framework to ensure clarity and effectiveness before reintroducing compulsory seat belt measures for buses.

Public education campaigns encouraging voluntary seat belt use are expected to accompany this interim period, reinforcing safety awareness while legislative refinements are carried out.

The company, which has maintained a regional presence since 2010, says the planned Hong Kong location will enhance its ability to serve private banks, family offices, wealth intermediaries and institutional investors in one of the world’s fastest-growing wealth markets.

The proposed office, subject to regulatory approval and necessary registrations with local authorities, will complement the firm’s existing regional bases in Singapore, Tokyo and Sydney.

Federated Hermes’s leadership said establishing a physical presence in Hong Kong will enable more direct engagement with clients and partners, offering localized expertise and closer collaboration on sophisticated investment strategies and portfolio construction.

Jim Roland, head of distribution for the Asia-Pacific region at Federated Hermes, emphasised that Asia remains one of the fastest-growing wealth markets globally, with increasing demand for long-term investment solutions and tailored advisory services.

He noted that the move will position the firm to meet evolving client needs and capture strategic growth opportunities in both wealth management and institutional channels.

The expansion reflects Federated Hermes’s wider global growth ambitions, as assets under management continue to rise and the firm seeks to bolster its competitive position across key international markets.

Industry analysts have highlighted that the Hong Kong office will also serve as a strategic base for engaging with major financial institutions across the region, reinforcing the company’s long-term commitment to Asia-Pacific.

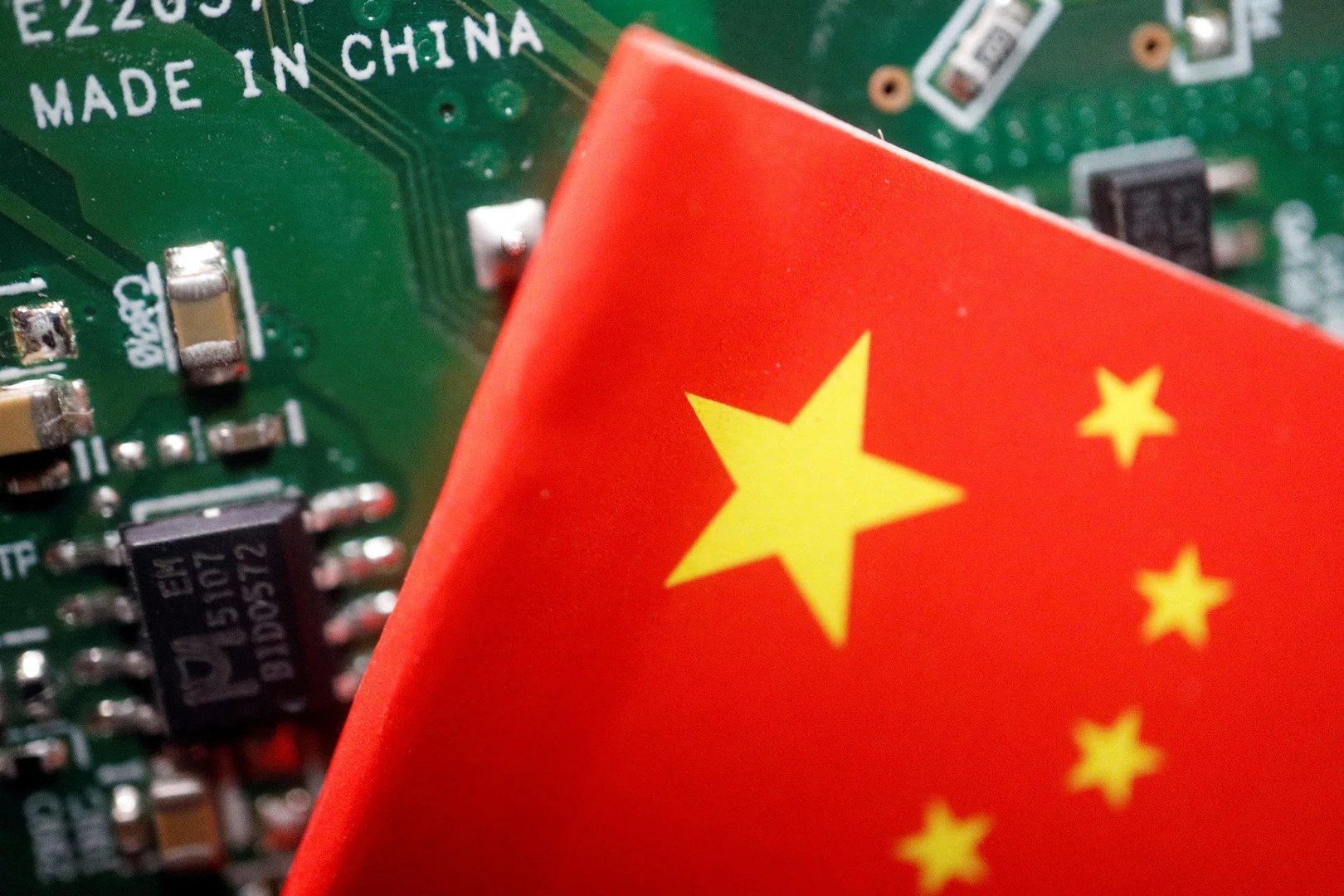

Chinese semiconductor designers Montage Technology and Axera Semiconductor have filed for initial public offerings on the Hong Kong Stock Exchange, marking significant moves by major Asian tech firms to tap global capital markets amid soaring demand for advanced chips.

Montage is seeking to raise up to HK$7.04 billion, equivalent to about US$902 million, through the sale of approximately 65.9 million shares priced at up to HK$106.89 each, with its listing scheduled for February 9, 2026.

Axera, a specialist in artificial intelligence inference chips, plans to offer about 104.9 million shares at HK$28.20 apiece in a deal expected to raise around HK$2.96 billion, with listing set for February 10, 2026.

Montage, established in 2004 and based in Shanghai, designs interconnect and memory interface chips widely used in data centres and cloud computing systems.

The proceeds from its Hong Kong listing are expected to support research and development, expand commercial capabilities and pursue strategic investments.

Cornerstone investors such as J.P. Morgan Investment Management, Alibaba Group and other global funds have agreed to long-term allocations in the offering, underscoring confidence in the company’s technology and growth trajectory.

Axera, founded in 2019 and backed by investors including Qiming Venture Partners and Tencent, focuses on visual edge artificial intelligence inference system-on-chip products for real-time on-device applications such as smart cameras, industrial equipment and vehicles.

In its regulatory filing, Axera outlined plans to use IPO proceeds to enhance its technology platform, accelerate product development and broaden sales channels internationally.

While the company reported revenue growth in 2025, it also noted widening net losses, reflecting heavy investment in research and market expansion.

The dual filings come as part of a broader trend of Chinese semiconductor and AI firms turning to Hong Kong’s equity markets to secure funding amid global competition and geopolitical pressures affecting access to technology and capital.

Analysts say these listings highlight both investor appetite for next-generation chip technologies and China’s strategic push to build domestic capabilities.

The moves by Montage and Axera are poised to add momentum to Hong Kong’s IPO pipeline and broaden its role as a financing hub for high-growth technology companies.

The decision applies to the long-standing concession held by Panama Ports Company, a subsidiary of CK Hutchison Holdings, which has managed the Balboa and Cristóbal terminals for decades.

The court found that the original agreement and its later extension failed to comply with constitutional requirements governing public concessions and state oversight.

Judges cited deficiencies in approval procedures and contractual terms that were deemed incompatible with Panama’s legal framework.

The ruling follows an official audit that identified financial and administrative irregularities linked to the extension of the concession.

Panamanian authorities have said port operations will continue without disruption while transitional arrangements are put in place.

The government has indicated that a new, transparent bidding process will be launched to select future operators, reinforcing national control over infrastructure tied to one of the world’s most important shipping routes.

The decision has wider strategic significance, as control of canal-adjacent ports has drawn international attention given the canal’s role in global commerce and security.

Panama’s government reiterated that the canal and its surrounding facilities remain under full national sovereignty and that the ruling reflects domestic legal considerations rather than external pressure.

The Hong Kong firm has rejected the court’s findings and warned it may seek legal remedies, arguing that the concession was lawfully granted and has supported port development and employment.

Despite the dispute, Panamanian officials emphasised that continuity, legal certainty and the public interest will guide the next phase of port management.

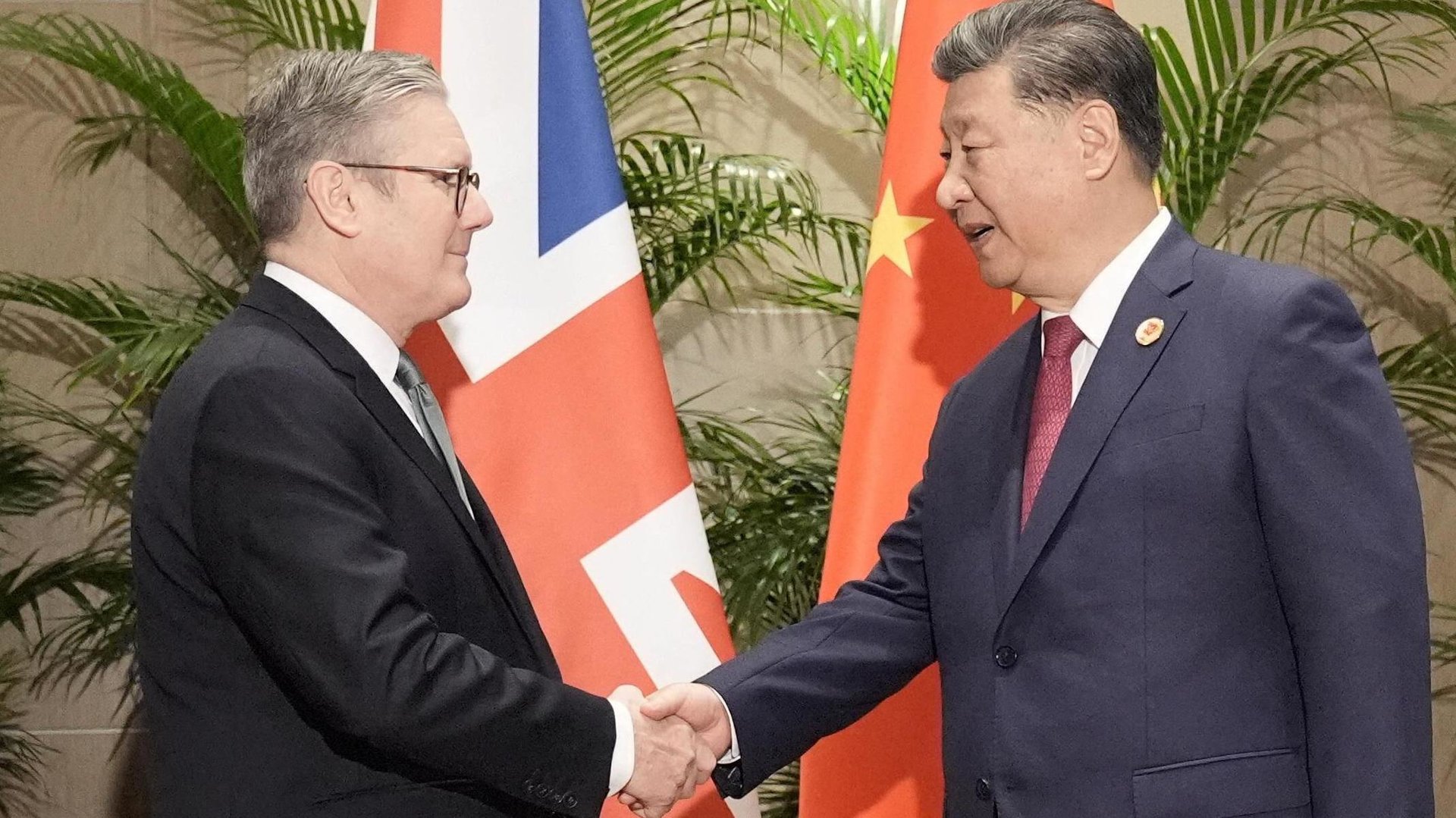

The investment, announced during British Prime Minister Sir Keir Starmer’s visit to Beijing, will focus on expanding medicines manufacturing, establishing research and development hubs and strengthening partnerships within China’s rapidly evolving life sciences ecosystem.

This commitment includes the previously announced $2.5 billion research hub in Beijing, as well as new manufacturing capacity across cities including Wuxi, Taizhou, Qingdao and additional sites that will serve both domestic and international markets.

AstraZeneca’s chief executive, Pascal Soriot, described the pledge as a “landmark investment” and the start of an “exciting next chapter” for the company in China, where it already operates multiple research centres and has deep collaboration with more than five hundred clinical hospitals.

At the same time, the company’s strategic pivot comes amid tensions with the UK government over drug pricing and industrial policy, which contributed to AstraZeneca pausing a planned £200 million research expansion in Cambridge and abandoning a £450 million project at its vaccine site in Speke near Liverpool.

Sir Keir Starmer welcomed the investment as beneficial for AstraZeneca’s growth and supportive of thousands of UK jobs, noting that partnerships between British universities and Chinese research institutions could further enhance the UK’s life sciences sector.

The plan is also set against a backdrop of robust global operations for AstraZeneca, which has recently pursued acquisitions including Gracell Biotechnologies to bolster its oncology capabilities and is concurrently expanding in other key markets including the United States.

While AstraZeneca faces regulatory inquiries in China relating to allegations of unpaid import taxes and other compliance matters, the company emphasised its commitment to the Chinese market as central to its innovation and manufacturing strategy.

By broadening its footprint in China, AstraZeneca aims to accelerate its development of breakthrough therapies including cell therapies and radioconjugates, while reinforcing international production networks that support global public health objectives.

The report, prepared by the bank’s Chief Investment Office and presented to clients in Hong Kong, underscores a preference for equities, continued confidence in artificial intelligence–led earnings growth and a comprehensive multi-asset approach to portfolio construction.

The bank projects that productivity gains from automation and data-driven decision-making will underpin corporate profitability and offset valuation pressures, dismissing the notion that current enthusiasm about artificial intelligence amounts to a speculative bubble.

Investors in the United States and Asia excluding Japan are expected to benefit from robust earnings growth, while near-term volatility is anticipated to remain a feature of market dynamics.

Standard Chartered’s outlook also highlights the appeal of emerging market bonds for income-seeking investors amid relatively subdued developed market yields and anticipates that easing United States government bond yields will provide attractive entry points for fixed-income positions.

Amid ongoing geopolitical tensions and shifting policy landscapes, gold and other alternative assets are cited as effective tools for managing portfolio correlation risks when traditional equity and bond relationships become less reliable.

Informed by these themes, the report’s local insights emphasise the importance of diversification across asset classes, sectors and regions to help investors navigate uncertain macroeconomic conditions and pursue both income and growth objectives in 2026.

Google’s search trends data indicate that queries related to the World Cup—including fixtures, teams, results and livestream information—are attracting exceptional volumes of attention in these markets.

The heightened interest reflects both the historic stature of the FIFA World Cup as the world’s premier international men’s football championship and the fervent enthusiasm among fans in Hong Kong and Taiwan as they follow preparations, schedules and commentary surrounding the next tournament.

The 2026 FIFA World Cup, set to be jointly hosted by Canada, Mexico and the United States with 48 teams and 104 matches across multiple cities, is already generating widespread online engagement.

Local online portals in Taiwan, for example, are reporting heavy traffic on pages providing comprehensive World Cup schedules, broadcast details and team lists in Mandarin for Taiwanese supporters, while Hong Kong sports news sites continue to carry extensive World Cup–related coverage in the context of wider regional football interest.

The prominence of World Cup–related search activity in both Hong Kong and Taiwan signals the event’s deep cultural resonance, uniting diverse audiences in anticipation of football’s most prestigious competition, scheduled to take place from June 11 to July 19, 2026.

The Hang Seng Index ended lower after earlier gains evaporated, reflecting a cautious shift in sentiment as traders opted to crystallize returns after recent strength in technology and growth stocks.

Markets throughout the region showed mixed performance in the wake of these developments, as broader macroeconomic signals and geopolitical considerations influenced risk appetite.

The pullback occurred even as recent data showed the Hang Seng Index had outperformed several international peers year-to-date, underscoring underlying resilience.

Analysts highlighted that such corrections often follow extended rallies, particularly when gains are concentrated in high-flying sectors that have led the market’s advance.

Broader market indicators pointed to intermittent volatility, with sentiment influenced by upcoming central bank decisions and economic readings that may shape investor expectations.

Despite the short-term pullback, longer-term technical indicators suggest that the broader trend remains intact, with support levels holding and trading volume remaining robust as strategic investors evaluate opportunities amid price adjustments.

Market participants are watching whether renewed buying interest emerges at key support levels, which could signal a resumption of the positive trajectory that characterized recent sessions.

Montage, a Shanghai-listed firm specialising in chips that accelerate data flows in data centres and artificial intelligence systems, has lined up high-profile cornerstone investors including Alibaba Group Holding and JPMorgan Asset Management as early backers of the offering.

These cornerstone participants are expected to receive guaranteed allocations in exchange for holding shares for a defined period, helping to underpin demand and provide stability to the listing.

In addition to Alibaba and JPMorgan, other institutional investors such as Scotland-based Aberdeen Group, South Korea’s Mirae Asset Securities and UBS Group’s asset-management arm are reported to be participating as cornerstone investors, signalling broad institutional confidence in the transaction.

The stock, already traded on the Shanghai exchange, has gained sharply in recent periods on strong earnings performance and strategic positioning in memory interconnect and AI-related semiconductor markets, contributing to a valuation near $22 billion.

Orders for the Hong Kong listing could begin imminently, with the company potentially listing its shares as early as this month, contingent on regulatory and market conditions.

Montage reported robust profitability, with 2024 net earnings of around 1.4 billion yuan (approximately US$196 million), and analysts forecast continued profit growth in coming years as global demand for cloud and AI infrastructure chips expands.

The deal comes during a period of heightened activity in Hong Kong’s equity capital markets, where chip and AI-linked firms have driven a surge in new issuance, attracting international investors and reinforcing the city’s standing as a premier venue for technology sector capital-raising.

Final details of the offering, including pricing and allotment, will be determined as institutional and retail bookbuilding progresses in the coming weeks.

In recent months, traffic through the exchange — supported heavily by Equinix’s International Business Exchange data centers — has climbed sharply as enterprises and cloud networks intensify their reliance on the region’s infrastructure.

Equinix facilities in Hong Kong host a substantial share of the exchange’s physical capacity and interconnection points, reflecting the company’s strategic position in the market.

This growth comes amid broader investments in digital infrastructure, including Equinix’s expansion with a new data center, HK6, designed to support advanced computing workloads.

These developments reinforce Hong Kong’s role as a gateway for digital traffic between China, Southeast Asia and the wider world.

The surge in exchange activity also reflects rising demand for low-latency connectivity among global cloud providers, financial institutions and technology firms seeking to leverage the region’s network-rich ecosystem.

With this sustained increase in utilization, Hong Kong’s Internet Exchange continues to strengthen its standing among Asia’s leading digital corridors as technology adoption and cloud deployments accelerate.

The bank’s leadership acknowledged that, although Hong Kong raised HK$286 billion in IPO proceeds last year, the bank lagged behind major competitors in securing lead sponsorship roles, highlighting talent gaps and the impact of earlier exits from parts of its investment banking operations in Europe and the United States.

This shortfall has prompted senior executives to reprioritise Asia’s largest financial centre as a core focus for growth in equity capital markets, aiming to strengthen the bank’s relevance and capture a greater share of future issuance.

Senior bankers at HSBC described the effort to rebuild market share as “maniacally focused,” with specific plans to bolster the firm’s Hong Kong investment banking division by hiring experienced Chinese bankers and deepening relationships with issuers planning listings on the Hong Kong Exchange.

The shift forms part of a broader pivot under Chief Executive Georges Elhedery, who has emphasised Asia and the Middle East as high-growth regions to offset slower activity in traditional Western markets.

Under this strategy, HSBC aims to elevate its position in equity capital markets league tables and compete more effectively with rivals such as Morgan Stanley, JPMorgan and China International Capital Corporation, which dominated the region’s IPO sponsorship roles last year.

The renewed focus comes in the context of a broader resurgence of Hong Kong’s capital markets after years of subdued issuance elsewhere.

With mainland Chinese firms and global issuers returning to list in the city, HSBC’s pivot underscores the bank’s intent to leverage robust market fundamentals and regulatory support that have helped Hong Kong reclaim its role as a leading global listing venue.

Bank executives have indicated that capturing a larger slice of this opportunity will require not only expanded human capital but also deeper engagement with institutional and corporate clients in the region, positioning HSBC to benefit from anticipated future IPO activity and the continued growth of Asia’s equity markets.

Microsoft saw its market value drop by roughly $360 billion after reporting a 66% increase in data centre costs tied to AI spending, a development that weighed on its share price even as revenue grew.

Apple moved to expand its artificial intelligence capabilities by acquiring an Israeli start‑up focused on facial expression analysis in a deal approaching $2 billion, while OpenAI is in discussions to raise up to $40 billion in fresh capital from major technology partners including Nvidia, Amazon, and Microsoft.

Meta Platforms reported record sales that lifted its share price by around 10% even as it outlined large capital expenditures for future growth, while Tesla announced the discontinuation of certain vehicle models as part of its pivot toward AI and robotics initiatives after posting its first annual revenue decline.

Uber committed $500 million to Canada’s Waabi to accelerate deployment of autonomous vehicles, signalling intensifying investment in self‑driving technology.

Workforce dynamics remain in flux as Amazon moved to cut another 16,000 corporate jobs, bringing total layoffs to 30,000 in recent months amid higher AI spending.

On the semiconductor front, ASML forecast strong sales driven by robust demand for AI‑related chips, underscoring continued capital expenditure in critical supply chains.

Regulatory landscapes are also evolving, with the UK competition authority targeting Google’s AI search overviews to loosen the company’s search dominance and encourage a fairer deal for publishers.

SoftBank is reportedly close to agreeing an additional $30 billion investment in OpenAI as AI capital commitments escalate steeply.

Key global names including Alibaba Group and JPMorgan Asset Management are expected to participate as cornerstone investors in the transaction, agreeing to take long-term positions in exchange for allocations that help underpin demand and pricing for the offering.

Other cornerstone commitments are said to include Aberdeen Group, Mirae Asset Securities and UBS Asset Management, drawing significant institutional support for what is shaping up to be one of the city’s most closely watched technology listings this year.

Montage, already listed on the Shanghai Star Market, specialises in designing chips that facilitate high-speed data flows in data centres and artificial intelligence accelerators, a segment that has seen strong growth and strategic importance amid intensifying global competition in advanced computing technologies.

The company’s share price has exhibited notable strength, rising sharply over the past year as investors embraced its positioning in memory interconnect and related semiconductor markets.

With a valuation estimated at around $22 billion and profitability expected to expand over the coming years, the firm is looking to harness broader capital markets through a Hong Kong listing that would open access to a wider pool of international investors.

The planned offering, which involves the issuance of ordinary overseas shares, aligns with a broader resurgence of initial public offerings in the Asian financial hub, particularly among firms tied to artificial intelligence and cutting-edge technology.

Montage’s decision to pursue its flagship share sale in Hong Kong underscores the bourse’s appeal as a global fundraising centre and a gateway for Chinese technology firms to diversify capital sources.

With order-taking from investors expected to begin imminently, the level of demand and final pricing will offer early insight into market appetite for high-growth semiconductor names and the strength of Hong Kong’s equity capital markets in a period of renewed fundraising activity across the tech sector.

In Eastern Europe, the US president said Vladimir Putin agreed to suspend attacks on Ukraine’s capital Kyiv after a direct appeal, offering a temporary reduction in hostilities amid winter hardship.

Across North America, Canadian legislators sharply criticised the Alberta Prosperity Project for seeking help from the Trump administration, and in the United States federal officials signalled plans to scale back ICE activities in Minnesota, contingent on local arrangements for prison access.

In the Caribbean, Cuban authorities warned that the island may have as little as fifteen to twenty days of oil remaining while crude exports to Havana have dwindled amid broader export blockades and pressure on regional suppliers.

Defence cooperation in Europe faces questions as Germany indicated that its joint fighter jet project with France may deliver fewer aircraft after Airbus’s chief executive said the company has stepped back from earlier expectations.

Economic policy headlines include research showing that proposed Trump-era tariffs could cause greater losses for Europe and the United Kingdom than for the United States.

Shifts in energy markets were also apparent as Russia’s oil revenue fell sharply, dropping by roughly one fifth in 2025 compared with the prior year, a reflection of sustained sanctions.

On currency markets and global finance, commentary from analysts challenged notions of imminent displacement of the US dollar’s dominant position, pointing to durable roles in key foreign exchange mechanisms.

Confirmed vs unclear: What we can confirm is that leaders discussed a pause in Moscow’s attacks on Kyiv and that Cuba’s oil supply is acutely low; what’s still unclear is the precise implementation timeline and practical effects of the ceasefire commitment and how quickly Cuba can restore energy imports under current constraints.

These developments underscore the interconnected challenges facing governments, from managing conflict and defence industrial programmes to balancing trade policy and energy security.

Amid these pressures, diplomatic engagement, economic coordination, and adaptive policy responses will shape outcomes over the coming months.

After several years of downward pressure, largely driven by high borrowing costs and subdued sentiment, official housing indices have recorded consecutive months of rising home values, with prices reaching levels not seen in over a year, reflecting returning confidence among local and mainland buyers.

The rebound has been supported by easing financing conditions as global interest rate expectations shift lower, making mortgage financing more affordable and encouraging owner-occupiers to re-enter the market.

Analysts now see the sustained recovery as more than a short-term bounce, underpinned by improving affordability and policy tailwinds designed to stabilise the sector.

Previous stamp duty reductions and initiatives to attract new residents, including relaxation of talent and student visa quotas, have contributed to increased demand, further boosting transaction momentum.

Transaction volumes have also risen after a prolonged slump, with primary and secondary sales showing marked growth compared with earlier in the downturn, suggesting pent-up demand is translating into actual purchases rather than speculative interest alone.

Industry forecasts point to continued price growth into 2026, with some institutions projecting gains of around five to ten per cent next year as markets fully absorb earlier policy shifts and capital cost relief.

A number of property consultants and financial firms highlight that the market is moving from a stabilisation phase into a broader upcycle, driven by both genuine housing needs and returning investor interest.

While challenges remain, including uneven demand across luxury and mass residential segments, the overall trajectory points to a housing market that is regaining momentum, contributing to renewed economic activity and urban vibrancy in Hong Kong as the city enters 2026 with firmer foundations and positive sentiment.

Microsoft lost about $360 billion in market value as AI spending unsettled investors, while Tesla scrapped certain models as it pivots toward AI amid its first annual revenue decline.

In geopolitics, the United States assembled a major military force posture aimed at Iran, while also preparing to nominate Kevin Warsh as the next Federal Reserve chair to replace Jay Powell.

Washington also moved to open Venezuela’s vast crude reserves to major oil firms and launched a lawsuit against the IRS and Treasury seeking at least $10 billion.

Elsewhere, Apple delivered a record $144 billion quarter powered by strong iPhone sales, HSBC positioned itself for more Hong Kong IPO activity, and EasyJet’s chief criticised proposed EU cabin bag rules.

Together, these developments reflect intersecting pressures reshaping global markets, regulation, trade competition, and strategic diplomacy in real time.

The framework treats tokenised securities as traditional securities wrapped in blockchain-based technology while ensuring they remain subject to existing legal obligations under Hong Kong’s Securities and Futures Ordinance, including licensing and conduct requirements for intermediaries.

The guidance requires firms to maintain rigorous investor protections and risk management controls while acknowledging the efficiency, transparency and cost benefits that digital tokens can introduce to the market.

Licensed entities engaging in tokenisation must demonstrate robust oversight of technology and ownership risks, retain responsibility even when outsourcing key functions, and provide comprehensive disclosure to clients about the nature of tokenised products.

The framework also sets out conditions for tokenising authorised investment products, including requirements for proper authorisation and safeguards tailored to blockchain-based structures.

These measures aim to foster innovation and growth in Hong Kong’s digital asset ecosystem while upholding market integrity and investor confidence.

The completed regulatory pathway marks a significant step in integrating blockchain technology with traditional financial products and supports Hong Kong’s ambitions as a leading global fintech hub.

The stock rose from about $34.76 at the prior close to open near $36.33, a move that reflected positive sentiment among investors and technical strength following recent trading above key moving averages.

The uptick occurred on relatively modest trading volume but illustrated growing interest in the property developer’s outlook as its share price continues to track above both its fifty-day and two-hundred-day simple moving averages, widely watched indicators of short- and long-term trends.

Analysts and market watchers attribute the gap up to a combination of factors that have underpinned confidence in Hongkong Land’s medium-term performance.

The company, which owns and manages a portfolio of premier office towers, retail complexes and mixed-use developments across Asia’s major financial centres, has benefited from stabilising conditions in the Hong Kong and Singapore commercial property markets and improving leasing momentum in key assets.

As of the latest trading data, the stock is trading comfortably above technical support levels, suggesting that market participants are increasingly optimistic about a recovery in the region’s real estate sector and the company’s ability to deliver sustainable cash flows amid broader economic headwinds.

The recent pre-market gap up adds to a year of strong relative performance for Hongkong Land’s stock, which has nearly doubled over the past twelve months despite volatility in global markets.

Investors have been closely watching fundamental developments, including leasing deals that signal strengthening demand for high-quality office space and a series of strategic portfolio adjustments by the company.

While trading volumes remain light compared with major equities, the price action highlights renewed speculative interest and technical momentum that could shape investor positioning as the broader market digests macroeconomic cues and sector-specific news through the opening weeks of the year.

Starmer met Chinese President Xi Jinping in discussions aimed at building a long-term strategic partnership, emphasising cooperation on trade, investment, climate change and global stability at a time of heightened international uncertainty.

Starmer described the relationship as being in a “good place” following the talks and underscored the need for a more “sophisticated” and consistent partnership between the United Kingdom and the world’s second-largest economy.

China’s president, for his part, highlighted the importance of strengthening ties to support peace and stability, urging both nations to “rise above differences” in their approach to global challenges.

The British delegation accompanying Starmer included more than fifty senior business leaders and representatives from cultural organisations, reflecting the emphasis on trade opportunities and market access.

A series of agreements and deliverables have emerged from the visit, including commitments to lower Chinese tariffs on Scotch whisky and a new thirty-day visa-free arrangement for British visitors to China.

High-profile business announcements were also made, such as a multi-billion-pound investment by AstraZeneca in Chinese manufacturing and research, illustrating the economic dimension of the mission.

Beyond commercial priorities, Starmer sought to balance economic engagement with national security considerations, asserting that the UK will protect its security interests while pursuing pragmatic cooperation.

This approach reflects London’s attempt to navigate complex ties with Beijing amid lingering tensions over issues including espionage concerns, human rights and China’s support for Russia in the Ukraine conflict.

Starmer’s visit also occurs amid broader strategic recalibration by Western governments, as allies reassess relations with China and pursue diversified trade and investment partnerships.

The trip follows a period of strained ties during which the UK’s previous government adopted measures to curb Chinese investment in sensitive infrastructure.

The Labour government’s current policy seeks pragmatic engagement that supports British economic priorities while maintaining “guardrails” on security risks.

As Starmer continues his visit with engagements in Shanghai and further meetings with Chinese officials, the United Kingdom’s reset with Beijing underscores the complexity of balancing diplomatic outreach, economic opportunity and strategic caution in an evolving global landscape.

The ruling came from Seoul Central District Court, which found Kim guilty in a case centered on her acceptance of a luxury necklace from the Unification Church.

She was also fined 12.8 million won and the necklace was ordered confiscated.

Kim, the wife of former president Yoon Suk Yeol, had been held in custody since August during the investigation.

Her conviction follows a turbulent period in South Korean politics, with Yoon having been removed from office in April of the previous year after attempting to impose martial law in December 2024. He is now facing multiple trials, including charges of insurrection.

Prosecutors had sought a 15-year sentence and fines exceeding 2.9 billion won, covering all of the charges against Kim.

However, the court acquitted her on separate counts involving stock-price manipulation and political funding law breaches.

The Unification Church has stated it did not expect anything in return for its gift, and its leader Han Hak-ja, also facing trial, denies intending to influence Kim.

Delivering the verdict, Judge Woo In-sung said that while Kim held no formal government authority, her symbolic role as first lady carried moral weight.

Her legal team responded by stating they would study the court’s decision before determining whether to appeal.

Outside the courtroom, supporters of the former first couple applauded after the acquittals were read, despite freezing temperatures.

What we can confirm is Kim’s bribery conviction and her 20-month prison sentence imposed by the Seoul Central District Court; what’s still unclear is whether her legal team will file an appeal on her behalf in the coming days.

The announcement underscores the centrality of the auto industry to Thailand’s economy, reflecting both the resilience of local manufacturing and the challenges posed by global competition.

TAIA president Suvachai Suphakanjandachakul said the 2026 output goal of 1.5 million units is an increase from around 1.455 million vehicles produced in 2025. The association forecasts that, of those 1.5 million units, some 550,000 will be sold domestically while 950,000 will be shipped abroad, keeping export volume in line with 2025 levels.

Exports remain a key driver of Thailand’s automotive base, which accounts for a significant share of industrial output and employment.

However, the industry’s leadership highlights ongoing headwinds in overseas markets, including intensifying competition from regional producers such as China, which has expanded its presence in traditional Thai export markets like Australia.

Part of the external uncertainty revolves around evolving electric vehicle (EV) export incentives.

TAIA referenced provisions under an EV policy structure that allow certain export vehicles to be counted as more than one unit for incentive calculations and that extend specific export deadlines into the middle of 2026. What we can confirm is that industry leaders are engaging with these policy mechanisms; what’s still unclear is how final implementation and market reception will influence actual export performance.

Domestically, the industry is calling for sustained policy support after Thailand’s election cycle.

Suvachai noted that continuity in measures such as proposed scrappage-style schemes, which would encourage owners of older vehicles to trade in for new models, could help stimulate demand in a market where household debt and tighter lending conditions have constrained some buyer segments.

The Federation of Thai Industries reported that total vehicle production for 2025 was slightly above target, at 1,455,569 units, though export production declined by over five percent from the previous year.

Domestic sales recorded notable improvement in late 2025, with December sales up significantly year-on-year, while certain segments like pickup trucks remained subdued.

Thailand’s automotive ecosystem continues to be a cornerstone of industrial strategy and export identity.

By setting a clear production target and advocating for consistent policy frameworks, industry leaders aim to reinforce confidence among investors, suppliers, and consumers.

Sustained focus on both domestic markets and global trade competitiveness will be essential as Thailand navigates its role in the future of mobility and manufacturing.

The emphasis on stable production goals and strategic policy alignment reflects a positive trajectory for Thailand’s industrial development and economic confidence as the country enters 2026 with ambition and clarity.

The move was outlined alongside annual results showing the company’s first year-on-year revenue decline, with Tesla describing a strategy increasingly centered on its Optimus humanoid robot, self-driving development, and a planned robotaxi business.

The company also disclosed a two billion dollar investment in xAI, the artificial-intelligence venture controlled by chief executive Elon Musk.

The decision links Tesla’s industrial ambitions to a separate AI platform effort and arrives as investors weigh the benefits of shared AI infrastructure against concerns about capital allocation and potential conflicts when a public company funds a business led by the same executive.

Tesla said the Model S and Model X lines will end in the second quarter of 2026, and that production capacity at its Fremont, California facility will be repurposed for Optimus robot manufacturing.

The Model S launched in 2012 and the Model X in 2015, but sales have become a smaller slice of Tesla’s overall deliveries as the Model 3 and Model Y dominated volume.

In its latest reporting, Tesla said total 2025 revenue fell about three percent to roughly ninety-four point eight billion dollars.

The company also posted its lowest annual profit since the pandemic, with net income reported at about three point eight billion dollars for 2025, down sharply from the prior year.

Quarterly performance highlighted the strain behind the annual picture.

Tesla reported fourth-quarter net income of about eight hundred forty million dollars, down around sixty-one percent year on year, while adjusted profit was about one point eight billion dollars; revenue for the quarter was around twenty-four point nine billion dollars, and adjusted earnings were about fifty cents per share.

Tesla attributed key parts of the automotive slowdown to weaker vehicle sales, with fourth-quarter deliveries down about sixteen percent from a year earlier, and automotive revenue reported down about eleven percent over 2025. In the company’s smaller “other models” category, which includes Model S, Model X, and Cybertruck, deliveries were reported at about fifty thousand eight hundred fifty units for 2025, down roughly forty percent.

Confirmed vs unclear: What is confirmed is Tesla’s stated plan to end Model S and Model X production in the second quarter of 2026 and its two billion dollar investment in xAI, alongside a three percent decline in 2025 revenue; what’s still unclear is the precise timeline and regulatory pathway for broad robotaxi deployment and the scale at which Optimus will be produced once Fremont capacity is retooled.

The company said it expects to spend about twenty billion dollars in capital expenditures in 2026, more than doubling its recent run-rate, as it builds out factories and compute for robotics and autonomy.

Tesla also reported that subscriptions to its full self-driving software reached about one point one million users, a figure it presented as evidence that software revenue can grow even when vehicle sales soften.

Supporters of the strategy argue that dedicating resources to Optimus, autonomy, and AI creates a path to higher-margin revenue streams that are less dependent on annual vehicle delivery growth.

Skeptics counter that discontinuing legacy halo models while committing large sums to adjacent AI ventures increases execution risk, especially as the company faces intensifying electric-vehicle competition and scrutiny over autonomous-driving claims.

Tesla said it is pressing ahead with plans for a dedicated “Cybercab” robotaxi concept and additional U.S. city rollouts tied to autonomy, while maintaining that its long-term identity is shifting toward what Musk has described as a “physical AI” company.

The near-term test is whether higher spending on robotics, AI, and autonomy can offset weaker automotive revenue and restore profit momentum without introducing new governance or regulatory constraints.

The group was convicted of multiple offences, including murder, kidnapping, fraud and running illegal gambling operations.

The case traces back to Lawkai’s transformation from a poor town into the hub of a lucrative illicit economy where several criminal clans operated.

The Ming network collapsed in 2023, when family members were arrested and sent back to China by ethnic armed groups that had taken control of Lawkai during clashes with the Myanmar military.

Authorities say the scams were conducted largely online and inflicted heavy financial losses, with many victims described as Chinese nationals who lost billions of dollars.

The fraud ecosystem also relied on coercion: the United Nations has said hundreds of thousands of people have been trafficked for scam operations in Myanmar and other Southeast Asian countries, with trafficked workers forced to participate in targeting victims.

Within the Lawkai landscape, the Ming family was described as one of the few clans with mafia-like control.

Their rise dates to the early 2000s, after armed group leaders in the city were toppled during a military operation led by Min Aung Hlaing, who later became Myanmar’s military leader following the 2021 coup.

Ming Xuechang, identified as the head of the family, was described as running one of Lawkai’s most notorious scam centres, known as Tiger Villa.

The family’s income reportedly began with gambling and prostitution before shifting into online fraud operations, and accounts from freed workers described harsh conditions, tight security, and recurrent violence, including beatings and torture.

The executions were not the only sentences in the case.

More than 20 other members of the Ming family were sentenced to prison terms ranging from five years to life imprisonment for their roles in the criminal operations, including the trafficking and abuse of workers.

Myanmar’s military reported in 2023 that Ming Xuechang took his own life while attempting to escape arrest.

Separately, Chinese authorities have released confessions from some of those arrested as part of a state documentary presenting the crackdown as an effort to eliminate scam networks.

The broader architecture behind these schemes is not confined to one family or one town, with similar scam operations cited across parts of the region, including Myanmar, Cambodia, and Laos.

What happens next will be shaped by whether enforcement pressures dismantle remaining networks, how trafficking routes are disrupted, and whether additional prosecutions follow for other clans linked to the same cross-border scam economy.

The remarks came during direct talks with Xi, where Starmer presented the UK’s approach as one of structured engagement rather than simple confrontation or detachment.

While the precise policy commitments were not detailed publicly, the language suggests a bid to manage competition and cooperation in parallel.

Relations between the two countries have been strained by disputes spanning national security, technology, and geopolitical alignment.

Britain, like other Western states, has raised concerns in recent years about cyber activity, sensitive infrastructure, and the security implications of deep economic interdependence.

At the same time, China remains one of the world’s largest economies and a major trading partner, making a complete diplomatic freeze impractical for a UK government seeking growth and stable global market access.

Starmer’s emphasis on a more mature, “sophisticated” framework points to an attempt to rebuild channels of dialogue while maintaining safeguards.

The UK’s position has increasingly centred on a selective strategy: cooperating where interests overlap, such as trade and climate, while limiting exposure in areas viewed as strategically sensitive.

Beijing, for its part, has consistently argued for stronger economic ties and has rejected Western accusations of espionage or coercive practices.

Starmer’s outreach also comes as European governments reassess their China policies amid shifting global supply chains and rising strategic competition between Beijing and Washington.

For London, the challenge is to define an approach that is economically realistic without appearing complacent on politics or security.

The meeting underscores how both sides are trying to stabilise a relationship that is unlikely to return to the optimism of earlier decades, but also difficult to manage through distance alone.

The tone of “sophistication” suggests diplomacy built on clearer boundaries, narrower cooperation, and more explicit risk management.

What to watch next:

- Whether the UK outlines concrete areas for renewed economic or diplomatic engagement

- Any new guardrails announced around technology, security, or investment screening

- Beijing’s response and whether it offers reciprocal steps to ease tensions

- How the UK positions itself amid US-China strategic rivalry

- Upcoming ministerial or trade-level follow-up meetings that test this reset

The run of shows at Kai Tak Stadium from January twenty-fourth to twenty-sixth marked the final stop of the tour, which began in July two thousand twenty-five and spanned Asia, North America and Europe, with thirty-three performances in sixteen cities.

Fans filled Hong Kong’s largest venue with overwhelming enthusiasm, creating a sea of light and energy as BLACKPINK opened their finale with high-octane hits including “Kill This Love” and “Pink Venom.” The group, celebrating their tenth anniversary since debut, delivered a rich setlist that also featured “How You Like That,” “Playing With Fire” and “Shut Down,” along with solo segments that showcased each member’s distinctive artistry.

The emotional resonance of the concerts was palpable, with members visibly moved during the closing moments and expressing gratitude for the support of their global fanbase, known as BLINKs.

The Hong Kong finale highlighted not only BLACKPINK’s sustained global appeal but also the scale of their achievements.

They became the first female K-pop act to headline stadium concerts in venues such as Wembley Stadium in the United Kingdom, with multiple sold-out dates across major markets, reaffirming their stature in the international music landscape.

The DEADLINE World Tour’s success was reflected in its record-breaking attendance and the overwhelmingly positive reception from diverse audiences.

Looking ahead, BLACKPINK has announced plans to release their third mini-album, DEADLINE, on February twenty-seventh, marking their first group musical project in over three years.

The new release has heightened anticipation among fans and industry observers alike, underscoring that the group’s creative momentum remains strong even as the tour concludes.

With promises of further activities and new music, BLACKPINK’s return to the studio and the stage signals an exciting next chapter for the group and their global community of supporters.

As the final curtain fell in Hong Kong, the air was filled with both celebration and anticipation, capturing a moment of reflection on a journey defined by artistic growth and an expansive international footprint.

The successful tour finale not only celebrated BLACKPINK’s past achievements but also set the stage for their evolving future.

The launch reflects growing demand from regional and international investors for diversified exposure to high-quality companies benefiting from structural growth in Asia’s major economies.

The new exchange-traded fund focuses on a curated basket of leading equities from China and key ASEAN markets, prioritising firms with strong fundamentals, established market positions and consistent performance records.

By combining exposure to mainland Chinese enterprises with select Southeast Asian companies, the product aims to capture long-term growth driven by consumption, technology adoption, infrastructure development and regional trade integration.

Market participants say the introduction of the fund highlights Hong Kong’s continued innovation in financial products and its strategic position as a gateway connecting global capital with Asian growth opportunities.

The exchange-traded fund structure offers investors transparency, liquidity and cost efficiency, making it an attractive option for both institutional and retail participants seeking broad regional exposure through a single listed vehicle.

The launch comes amid rising investor interest in Asia-focused strategies, particularly as China and ASEAN economies deepen economic ties and strengthen supply chain cooperation.

Policymakers and industry leaders have emphasised that such products support capital market development while reinforcing Hong Kong’s role in facilitating cross-border investment flows under established connectivity frameworks.

With the debut of this equity exchange-traded fund, Hong Kong further expands its range of Asia-centric investment tools, providing investors with a streamlined avenue to access premium regional assets and underscoring the city’s commitment to maintaining a dynamic and internationally competitive financial market.

After a prolonged downturn that saw housing prices slump sharply from their 2021 peaks, residential property values recorded sustained growth in recent months, lifted by robust demand from mainland buyers and stabilising market conditions.

This renewed interest has helped propel transaction volumes and set the stage for further price gains in 2026 as buyers seek safe haven assets and long-term holdings in the territory.

The city’s equity markets have also responded positively to cross-border investment flows, with the benchmark Hang Seng Index climbing to multi-year highs and trading volumes reflecting strong participation via the Hong Kong-Mainland Stock Connect channels.

Mainland investors have channelled unprecedented sums into Hong Kong equities, with net purchases reaching record levels that underscore sustained confidence in the city’s role as a global financial centre.

The inflows have contributed to a rally in both traditional blue-chip stocks and technology shares, supporting broader market resilience even as global economic conditions remain challenging.

Analysts attribute the surge in mainland capital to several factors, including ample liquidity within China’s domestic banking system, diversification strategies among institutional and private investors, and favourable policy linkages that strengthen financial connectivity between the mainland and Hong Kong.

Data from property agencies and market observers show that mainland buyers accounted for a substantial share of high-end residential transactions in 2025, pushing total purchase values to record highs and solidifying their influence on local pricing dynamics.

In parallel, Hong Kong’s housing price index recorded consecutive monthly increases, signalling a stabilising trend after years of correction.

Property specialists forecast that this momentum is likely to persist into 2026, with capital values tipped to rise further as demand from mainland and international buyers continues to gain traction.

The commercial property sector is similarly expected to benefit from renewed interest from mainland investors, who see value in office and accommodation assets amid evolving market conditions.

Market participants emphasise that the revival in both real estate and equity markets reflects a broader realignment of capital towards Hong Kong, where deep financial infrastructure, regulatory transparency and strategic positioning make it an attractive destination for diversified investment.

With cross-border connectivity measures and investor programmes facilitating greater flows of capital, the renewed mainland presence in Hong Kong markets is poised to support sustained growth and reinforce the city’s standing as a vibrant international financial hub.

The incident unfolded in the Sai Wan area when employees at the HSBC branch contacted authorities around mid-afternoon after the group presented an unusually large financial instrument that raised immediate suspicion.

Officers arrived at the scene shortly after the call and took two men and two women, all believed to be from mainland China, into custody on suspicion of using a false instrument.

Preliminary inquiries indicated that the cheque and accompanying documentation were likely counterfeit, and the case was transferred to a police station for further investigation.

Bank personnel had acted swiftly to challenge the authenticity of the documents before any transaction could be processed, averting a potential loss and triggering the law enforcement response.

Hong Kong’s financial sector has stringent anti-fraud protocols, and staff are trained to scrutinise instruments that deviate from normal business practices, especially those involving extraordinarily high nominal values.

Authorities have not yet disclosed detailed information about the motive behind the attempted transaction or whether the suspects are linked to other alleged financial crimes, but detectives are examining the origins of the documents and the roles of each individual taken into custody.

The four remain under investigation as police seek to determine whether the attempted encashment was part of a broader fraudulent scheme.

This episode underscores the vigilance of Hong Kong’s banking and law enforcement agencies in detecting and responding to sophisticated financial fraud attempts, reinforcing the territory’s reputation for robust financial oversight and protective measures within its global banking hub.

Police cautioned that investigations are ongoing and urged the public and financial institutions to remain alert to unusual financial activities. Law enforcement and banking regulators are continuing to cooperate to trace the source of the suspect documents and to prevent similar incidents in the future.

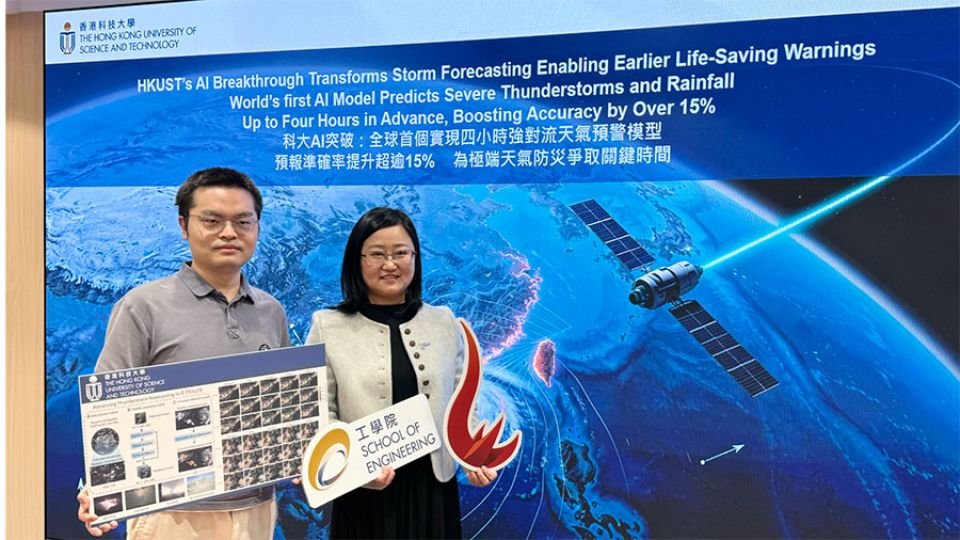

The innovation, unveiled at a press briefing in Hong Kong, represents a significant advancement in short-term storm prediction and has the potential to enhance preparedness and response by emergency services and communities across the region.

The model refreshes forecasts approximately every fifteen minutes and achieves an improvement in accuracy of more than fifteen per cent at a spatial scale of forty-eight kilometres.

The system, known as the Deep Diffusion Model based on Satellite Data, draws on generative artificial intelligence techniques and is trained on infrared measurements collected by China’s Fengyun-4 geostationary satellite from two thousand eighteen to two thousand twenty-one.

It has been validated using historical cases from two thousand twenty-two and two thousand twenty-three, demonstrating enhanced reliability in forecasting the evolution of convective cloud systems that give rise to intense rainfall and thunderstorms.

By capturing early signals of convective development from space, the model offers a longer predictive window than conventional radar- or ground station-based methods, which typically provide forecasts only minutes to a couple of hours ahead.

The project is a collaboration between scientists at the Hong Kong University of Science and Technology and national meteorological authorities.

Both the city’s Observatory and the China Meteorological Administration are evaluating the AI system for integration into operational forecasting frameworks.

Researchers emphasise that the extended forecast horizon could be invaluable for disaster preparedness and public safety, particularly as extreme weather events have grown more frequent and severe in recent years.

Hong Kong’s highest rainstorm warning was issued multiple times in the past year, underscoring the pressing need for improved early warning capabilities.

Beyond its immediate application in Hong Kong, the AI model’s framework covers a broad geographic region, offering high-frequency, high-resolution storm forecasts over tens of millions of square kilometres across East and Southeast Asia.

Its development reflects a broader trend in combining advanced artificial intelligence with meteorological expertise and satellite observation to strengthen regional climate resilience and community preparedness against extreme weather hazards.

The 19th edition of the forum, co-organised by the Hong Kong Special Administrative Region Government and the Hong Kong Trade Development Council, reaffirmed Hong Kong’s role as a premier international financial hub and a platform for global engagement.

Across numerous plenary sessions, roundtables and sectoral workshops, attendees explored emerging themes including macroeconomic trends, digital transformation, sustainable finance, investment innovation and the integration of finance with real economy activities.

Delegates from major economies underscored the importance of cross-border collaboration to address shifting global market dynamics and to unlock new growth opportunities.

The event’s deal-making segment delivered tangible outcomes, featuring structured matchmaking sessions that connected investors with high-potential projects.

These sessions facilitated hundreds of one-on-one meetings, reflecting strong interest in Asia’s startup ecosystem, technology ventures and green infrastructure initiatives.

Organisers highlighted that the deal-making component not only expands investment flows but also strengthens commercial linkages between regional and international partners.

Several keynote addresses and panel discussions examined the implications of financial innovation, including advances in artificial intelligence, fintech integration and evolving regulatory frameworks.

Senior policymakers reiterated commitments to fostering resilient and inclusive financial systems while reinforcing Hong Kong’s unique advantages under its established legal framework and strategic positioning within global markets.

Participants welcomed the forum as a signal of renewed momentum in international finance following recent global uncertainties.

Many expressed optimism that the connections forged and insights shared will translate into sustained partnerships and collaborative ventures.

The Asian Financial Forum concluded with calls to further harness collective expertise and capital to support long-term economic development across the region.

Organisers announced that the forum’s success underscores continued confidence in Hong Kong’s capacity to convene influential global networks and to shape the trajectory of finance and investment cooperation in the year ahead.

The system enables patients to verify their identity and complete clinic check-in by scanning their palm, removing the need for physical documents and substantially reducing waiting times at reception areas.

The technology combines palm print and palm vein recognition to deliver a high level of accuracy and security while remaining fast and contactless.

Patients who register in advance through Bupa’s digital health platform can use the service by simply holding their palm over a dedicated scanner upon arrival.

The solution is designed to function even when patients are wearing masks, supporting hygienic and seamless access to care.

The palm-based check-in service is being deployed initially at selected Bupa Hong Kong medical centres, with plans for wider rollout across its clinic network.

The initiative forms part of Bupa’s broader Connected Care strategy, which aims to integrate digital tools with in-person medical services to improve efficiency, accessibility, and overall patient satisfaction.

The collaboration leverages Tencent’s expertise in biometric and digital identity technologies, which have been increasingly adopted across Asia in sectors such as finance, transport, and public services.

By applying this technology to healthcare, the partners aim to reduce administrative friction, streamline patient flow, and allow medical staff to focus more fully on clinical care.

The launch reflects growing momentum in Hong Kong toward contactless and technology-driven healthcare solutions.

Early feedback indicates strong patient acceptance, with users highlighting the simplicity and speed of the process.

As adoption expands, the palm-based check-in system is expected to set a new benchmark for how digital identity tools can enhance everyday healthcare experiences in the city.

Official customs data released for December showed that import volumes dropped by roughly a quarter compared with the previous month, underscoring a shift in trade patterns as mainland buyers adjusted to market conditions and seasonality.

Despite the December downturn in Hong Kong-reported import figures, early January market activity indicated a resurgence in retail interest and rising dealer premiums.

Following a correction from the record high prices seen through much of 2025, bullion dealers reported that physical gold in China was trading at premiums over international benchmark spot levels, signalling stronger appetite among domestic buyers for bars and jewellery as the holiday and New Year period unfolded.

Retail demand in China and across parts of Asia was reported as relatively robust, even as elevated prices had earlier constrained some segments of the market.

Analysts said that the contrasting patterns at the turn of the year reflect both structural and seasonal dynamics.

The Hong Kong figures provide a transparent snapshot of one import channel but do not capture total mainland gold purchases, which also occur through Shanghai and Beijing hubs.

Meanwhile, dealer premiums — a key indicator of on-the-ground demand — have shifted from discount to modest positive levels in recent weeks, suggesting renewed confidence among physical buyers following price adjustments.

Market watchers pointed out that strong physical demand in early January often coincides with traditional buying periods and can be enhanced by expectations of future price trends.

Robust retail interest, particularly for bars and jewellery, has helped underpin local prices and contributed to the premium environment in key trading centres.

At the same time, global gold prices, after a period of volatility and correction from historic highs, continued to influence buying behaviour across Asia.

Overall, the latest data highlight a complex interplay between import flows, dealer pricing behaviour, and end-user demand.

While net imports via the Hong Kong route pulled back sharply in December, early evidence from the start of the new year points to heightened physical buying and premium strength that will be closely watched by market participants as 2026 unfolds.

After the market climbed more than 1,330 points, or roughly five per cent, over six consecutive trading days, participants are anticipating some profit-taking pressure as valuations approach near-term resistance levels.

The expectation of consolidation comes against a backdrop of soft global cues for Asian equities, with mixed performances across European and U.S. markets tempering risk appetites.

The Hang Seng Index closed sharply higher in the latest session, buoyed by broad-based gains, particularly among technology and financial names, before signs of a retreat emerged.

Stocks such as Alibaba Group and China Life Insurance were among the notable performers during the rally, while other major constituents contributed to elevated trading activity.

Despite the recent strength, analysts and traders alike view the current price levels as ripe for short-term profit-booking, which could lead to some downward pressure or sideways trading as the market digests recent gains.

Investors are also balancing local developments with broader macroeconomic indicators, including geopolitical concerns and policy signals from major central banks, which have helped shape sentiment in Asia’s equity markets.

In past instances when Hong Kong equities rallied — such as during AI optimism or stimulus-led advances — brief pullbacks have followed as traders recalibrated positions.

This pattern suggests that the anticipated profit-taking may be part of a normal market cycle rather than a more serious downturn.

Market strategists will be watching key technical levels for the Hang Seng Index and sector rotations for clues on whether broader momentum can be sustained or whether consolidation will dominate trading in the near term.

The benchmark Hang Seng Index dipped modestly from earlier peaks as traders rebalanced positions following a rally driven by strong earnings expectations and heightened interest in AI-related demand.

Short-term selling pressure near resistance levels contributed to the index’s retreat, underscoring caution among market participants.

Profit-taking was evident across key sectors, with the Hang Seng Tech Index also slipping as technology names cooled after recent advances.

Analysts said that profit-booking, particularly after extended gains in tech and AI-sensitive stocks, was a primary catalyst for the market’s pause.

Although a positive earnings outlook from major semiconductor firms lifted broader sentiment earlier in the week, the recent pullback reflects investor reluctance to push valuations higher without clear near-term catalysts.

Market observers noted that the retreat occurred against a backdrop of mixed signals from global markets, including cautious pricing of U.S. Federal Reserve rate-cut expectations and renewed scrutiny of AI valuations.

While upbeat forecasts for data-centre spending and artificial intelligence have underpinned optimism in recent sessions, some investors have grown wary of lofty valuations as profit-taking has intensified.

Several mainland and Hong Kong technology stocks, which had contributed to earlier gains, saw modest declines as traders adjusted exposure.

Despite the near-term softness, the broader market retains underlying support from strong corporate earnings and continued interest in growth themes.

Analysts said that any weakness could present opportunities for disciplined investors, particularly if fundamental drivers such as robust technology demand and earnings resilience persist.

With sentiment sensitive to global rate and valuation dynamics, trading volumes and price action in the coming sessions will be closely watched for signs of renewed momentum or further consolidation.

Hong Kong Accelerates Bid to Become a Global Gold Trading Hub with Vault Expansion and Clearing Pact

Government officials, exchanges and major banks are advancing plans that include expanding gold storage infrastructure, establishing a central clearing system and deepening market linkages with mainland China and global investors.

A recent memorandum of understanding with the Shanghai Gold Exchange will create a cross-border clearing platform connecting Hong Kong’s market with China’s extensive bullion trading ecosystem, reinforcing the city’s strategic position in Asia’s gold sector.

This collaboration is designed to enhance liquidity, lower transaction costs and improve settlement efficiency for participants on both sides of the border.

Trial operations of Hong Kong’s own gold clearing system are planned for later this year, according to government sources, signalling tangible progress toward operationalising this infrastructure.

These initiatives coincide with strong global demand for gold, which has surged following heightened geopolitical tensions and investor interest in safe-haven assets.

Infrastructure expansion is central to Hong Kong’s strategy.

The territory’s leadership has pledged to increase gold storage capacity significantly over the next three years by expanding existing facilities and building new vaults, including major projects at Hong Kong International Airport.

The Industrial and Commercial Bank of China (ICBC) plans to open a precious metals depository at the airport, complementing the Shanghai Gold Exchange’s first offshore vault in the city.

These developments are intended to accommodate both institutional and private bullion holders and support physical trading and delivery services that are fundamental to a premier bullion hub.

The Hong Kong government has also outlined broader ambitions such as creating a central gold clearing system, supporting new investment vehicles for gold products, and encouraging refineries and trading firms to establish a presence locally.

Chief Executive John Lee has emphasised cooperation with mainland authorities to align regulatory frameworks and explore mutual market access, potentially enabling smoother cross-border transactions and yuan-denominated trading.

These steps are intended to position the city as a transparent and efficient gateway for global investors seeking access to Asia’s booming precious metals markets.

Despite the optimism, challenges remain, including competition from established hubs such as London, Zurich and Singapore, as well as a noted shortage of specialised talent in bullion trading and refining.

Authorities are addressing these gaps by attracting talent from overseas and supporting the development of local expertise.

Observers say that if Hong Kong can successfully build robust infrastructure, regulatory clarity, and international participation, it could tilt the balance of global gold trading toward Asia, reflecting broader changes in commodity demand and financial market dynamics.

The Singapore Central Private Real Estate Fund, which is expected to launch in the first quarter of 2026 with more than S$8 billion in assets under management, is seeded with prime commercial office properties in Singapore’s central business district, including Marina Bay Financial Centre Towers and One Raffles Quay.

Both APG and QIA have agreed to be cornerstone investors in the fund, which aims to attract further institutional capital and professionalise long-term stewardship of high-quality gateway assets.

The fund reflects Hongkong Land’s broader strategic shift towards third-party capital and asset management after years of focusing on investment properties across Asia’s core markets.

By pooling institutional equity alongside its own seed portfolio, Hongkong Land seeks to create one of Singapore’s largest private real estate platforms, providing stable income generation through rental growth and occupancy resilience amid evolving market conditions.