Embassy officials described the claims as groundless smears, reiterating China’s opposition to all forms of hacking.

The dispute follows Singapore’s identification of the group—reported by Mandiant as China‑nexus—as actively probing energy, transport, and finance systems, prompting heightened cybersecurity alerts.

The collaboration leverages Qualtrics’ industry‑specific solutions to track satisfaction, loyalty, and service performance, enabling the airline to make data‑driven improvements and strengthen its competitive edge in the aviation sector.

The tariff’s exact start date hinges on a joint statement expected soon. It follows last week’s trade deal, one of the few the current U.S. administration has concluded ahead of the looming deadline.

Jakarta continues to negotiate details, including exemptions for key commodities such as palm oil, rubber and nickel.

Passengers leapt into the ocean to escape the blaze. In one case, a two‑month‑old infant was rescued with water in the lungs and is now in stable condition following medical treatment.

Authorities, supported by the Indonesian Navy, Coast Guard, Army and local fishermen, continue search operations while the investigation into the fire’s cause remains ongoing.

Health authorities, concerned by rising youth dependence, unregulated sales and smuggling, have moved to classify cannabis flower as a controlled herb.

The policy shift, prompted by the withdrawal of the Bhumjaithai Party from the ruling coalition, has sent the once‑booming cannabis sector, valued at over $1 billion, into turmoil as businesses scramble to adapt to new compliance demands.

Police report that Henry had been drinking beer and smoking cannabis before the fall, and that surveillance footage shows only Henry and his Ukrainian girlfriend entering their room.

Rescue teams pronounced him dead at the scene. His girlfriend was hospitalized in shock and is to be questioned once she has recovered.

Family members paid tribute, recalling plans for the couple to marry, as officials continue their investigation.

The government says it wants a fairer trade environment without sacrificing its green growth agenda or economic sovereignty.

Trade and Industry Minister Tengku Zafrul Abdul Aziz has emphasized that Malaysia will not yield on issues such as EV incentives or broader investment rules, even as talks intensify ahead of the August 1 tariff deadline.

Mixed performances in the banking, plantation and telecom sectors reflected investor caution, although sentiment lifted slightly as traders awaited further clarity on trade negotiations between Kuala Lumpur and Washington.

Authorities across the region recently dismantled several scam compounds, freeing more than 7,000 victims who had been coerced into defrauding foreigners. Most were starving, held in overcrowded conditions and deprived of medical care.

Survivors recount harrowing experiences of confinement, abuse, and relentless pressure to produce illicit profits for criminal syndicates operating across borders in Southeast Asia.

The incident, caused by the collapse of a mine wall early Monday, also buried nearby homes under debris and mining runoff.

Authorities and emergency crews continue search efforts amid concerns that more individuals may be trapped beneath the mud and rubble, highlighting ongoing safety risks in Myanmar’s lucrative jade mining sector.

The collapse, which occurred early on Monday morning, sent torrents of mud that engulfed both worksites and nearby settlements.

Police and rescue crews have recovered twelve bodies thus far and continue intensive search operations despite difficult terrain and unstable ground.

This decision is part of one of the largest stock manipulation cases in the country.

In a detailed verdict delivered over four hours on Sunday, the court mandated that the Anti-Money Laundering Office (AMLO) use 5.376 billion baht—generated from the sale of MORE and MORE-R shares on November 10, 2022—as compensation for the affected brokerage firms.

These firms had previously advanced funds for the transactions but had not received reimbursement.

Additionally, the ruling included the seizure of the 1.5 billion shares involved, which are now classified as state property.

The legal proceedings followed an investigation into the alleged manipulation of MORE shares, leading the Securities and Exchange Commission (SEC) to file a complaint on June 29, 2023.

The complaint targeted 32 individuals linked to the scheme.

Among those accused is Apimook Bamrungwong, who is alleged to have colluded in manipulating the prices of MORE and MORE-R shares between July 19 and November 10, 2022.

Reports indicate that Apimook used shares already in his possession as collateral for margin trading with 11 brokerage firms.

This tactic allowed him to place orders for 1.5 billion MORE and MORE-R shares at the market’s opening on November 10, 2022, at a price of 2.9 baht per share.

The case has drawn significant attention within Thailand’s financial sector, raising concerns regarding regulatory oversight and the integrity of trading practices.

The Provincial Police Bureau 3 has raised security to the highest level across Surin, Buri Ram, Si Sa Ket and Ubon Ratchathani provinces following reports of unusual activity along the Thai–Cambodian border.

On Monday, Pol Lt Gen Watana Yijean, who oversees the bureau, inspected operations in Phanom Dong Rak district, where concerns centred on the area around the Prasat Ta Muen Thom archaeological site. The precise nature of the activity has not been disclosed.

Authorities have also alleged that Cambodia dispatched as many as twenty‑three buses carrying Cambodian nationals to the site, leading to tensions with Thai troops stationed at the temple.

Security enhancements include:

- Permanent 24‑hour checkpoints in forested border regions

- Installation of surveillance cameras and drone units linked to command centres

- A forward command centre in Phanom Dong Rak under Pol Maj Gen Prasong Ruangdej

- Joint patrols involving the Provincial Administration Department and volunteer defenders, covering villages, schools, hospitals and government buildings

- Evacuation protocols for local communities in case of escalation

Watana confirmed that vital facilities—such as the Phanom Dong Rak hospital, neighbouring schools and refugee centres—have been prioritised for protection. In addition, one company of officers has been deployed by police headquarters in both Surin and Buri Ram to reinforce the region.

Previous incidents at the site include one in February, when Cambodian soldiers sang their national anthem at the temple, prompting formal protests from Thai military authorities. The disputed temple complex remains one of several contested areas straddling the Thai–Cambodian border. Military crossings and checkpoints have also seen increased monitoring, with some crossings temporarily closed following related incidents.

The elevated security measures come amid broader efforts by Thai police and defence forces to reinforce surveillance and border control in eastern provinces, including Chanthaburi, Trat and Sa Kaeo, in response to ongoing tensions and cross‑border provocations.

According to an analysis by the Siam Commercial Bank Economic Intelligence Centre (SCB EIC), Thailand’s economy is shifting from being a producer to serving primarily as a transit hub within global supply chains.

Research indicates that Thailand's imports have been accelerating since 2020, fueled by increasing dependency on Chinese production inputs across critical sectors.

From 2020 to 2024, Thailand's imports grew by an average of 10% a year, outpacing both GDP growth and export growth.

In 2024, imports were estimated at 53% of GDP, the highest ratio in 12 years.

This surge in imports has resulted in Thailand facing a trade deficit for the third consecutive year.

China has become Thailand's principal source of imports, accounting for over 25% of total import value.

This shift is largely attributed to the integration of Thai industries such as steel, plastics, and automotive into Chinese production chains.

Moreover, Thailand has become a key market for China’s surplus goods.

A growing trend toward online shopping and the prominence of businesses relying heavily on imported raw materials contribute to an increased influx of goods from China.

The acceleration in imports is driven by three primary factors: the outflow of surplus goods from China due to its slowing economy, the proliferation of cross-border e-commerce platforms appealing to Thai consumers, and the establishment of businesses with high import content across various sectors, including construction, restaurants, services, and manufacturing.

Specifically, SCB EIC's findings reveal that domestic consumption and Thailand's export sector are becoming increasingly dependent on imported goods, particularly from China, which poses challenges for the recovery of the industrial sector.

Producers of steel, circuit boards, electrical appliances, vehicles, and their components report diminishing reliance on domestically produced items.

The analysis suggests that a notable fraction of nearly 3,000 manufacturing firms may be functioning primarily as 'buy-and-sell' entities, often misrepresenting the origin of their goods.

Industries such as circuit boards, electronics, automotive parts, plastics, aluminum, and electrical appliances are specifically mentioned.

As these trends progress, the risks intensify for Thai exporters, who may encounter stricter trade barriers.

This evolving situation could gradually position Thailand as a buyer and transit country in global supply chains, potentially leading to a significant decline in domestic manufacturing activity.

In response to these challenges, government interventions may become crucial in safeguarding the competitiveness of Thai industries.

Protective regulations, promotional policies for local production, and careful oversight of foreign investments are necessary to fortify the economy against the pressures of a model increasingly reliant on 'buy-and-sell' operations, which could ultimately undermine domestic value creation.

Between January 1 and July 9, 2025, the CCIB recorded 175,477 cases, an average of 919 a day, with estimated financial losses of 14.87 billion baht; only about 2 percent of that amount (≈295.8 million baht) was recovered.

Authorities report that 56 percent of the scams involved online purchase fraud, 26 percent were money‑transfer scams and 7 percent were loan frauds, with the remainder involving other forms of deception.

More than 80 percent of cases were traced to networks operating from Poipet, Cambodia, rather than from Myanmar, as previously suggested.

On July 8, raids were conducted across 19 locations in Bangkok, Samut Prakan and Chonburi.

A residence in Bang Phli district was searched under a warrant targeting Cambodian casino magnate Kok An, owner of the Crown Casino Resort complex in Poipet.

Seized assets included multiple luxury vehicles and approximately 27 million baht in cash.

The operations focused on dismantling mule‑account networks and mapping financial pipelines tied to cross‑border fraud activities.

Kok An, 71, a senator from Cambodia’s ruling party and a prominent figure in the Poipet casino sector, controls several high‑rise properties, including 25‑ and 18‑storey towers and related entertainment venues suspected of housing call‑centre fraud operations.

He is wanted on charges of involvement in a transnational criminal organisation and money‑laundering.

Thailand has secured a court warrant and is collaborating with Interpol on a red notice.

Domestic law enforcement is coordinating with the Anti‑Money Laundering Office and the Attorney‑General’s office to pursue international prosecution.

Thai authorities indicate that the syndicate employed Thai nationals coerced into operating mule accounts and working in scam call‑centres located within Kok An’s Cambodian properties.

At least one Thai national reportedly died in Poipet in February under pressure linked to call‑centre operations.

The crackdown comes amid increasing diplomatic tension between Thailand and Cambodia.

It follows public statements by Thai political figures referencing deteriorated relations and alleged involvement of cross‑border elites in criminal networks.

Cambodia’s Senate leadership has condemned the actions as politically motivated and urged scrutiny of the measures.

Officials note that more stringent Thai border controls, including restricted checkpoint hours and enhanced screenings, have coincided with a drop in daily cybercrime complaints, from around 1,300 to below 900 per day in early June.

Closure of certain border crossings and restrictions on Thai nationals working in Cambodia are cited as contributing factors.

The CCIB has also pointed to earlier efforts: between March 2022 and March 2025, authorities processed over 1.18 million cybercrime cases and suspended more than 520,000 mule accounts, preventing losses estimated at 19.9 billion baht.

In parallel, the bureau dismantled major online gambling networks, including Bangkok operations handling over 11 billion baht in annual transactions, and blocked thousands of associated web URLs.

The ongoing operation aims to dismantle infrastructure allegedly used for hybrid fraud schemes, money‑laundering—sometimes involving cryptocurrency—and mule‑account systems.

Police have emphasised continued efforts to trace assets and prosecute involved individuals, both domestically and via international cooperation.

The heavy rainfall has resulted in extensive damage across various regions, with concerns that the death toll could rise as emergency response teams continue their efforts to locate missing individuals.

Rescue operations are particularly focused on heavily affected areas, including those near the capital, Seoul, and farther south in regions such as Sancheong County.

Reports indicate that significant infrastructural damage has occurred, with thousands of roads and buildings affected.

The worst of the disasters has been reported in Sancheong County, where meteorologists characterized the torrential rains as a one-in-200-year event.

The extreme weather has led to widespread devastation in rural communities, where residents are struggling to protect livestock and property amid rising waters.

Survivors of the flooding have described harrowing experiences as water levels surged rapidly.

Eyewitness accounts reveal the difficulties faced by those caught in the floods, with reports of individuals trapped in vehicles and others needing assistance to escape from flooded campsites.

Rescuers have employed methods including zip lines and helicopters to reach stranded individuals.

As of the latest updates, 11 people remain unaccounted for, and search efforts are ongoing as rescue workers comb through debris and impacted areas.

The flooding has disrupted the peak summer vacation season in South Korea, as many people had headed to mountainous areas to escape the oppressive heat, which often reaches the high 30s Celsius.

In Sancheong County, ten fatalities have been confirmed, with four people still reported missing after landslides struck mountainous villages.

Approximately 80% of South Korea is mountainous terrain, raising the risk of landslides during heavy rain events.

The monsoon rains, commonly referred to in Korea as 'summer guests', are observed regularly during the season but have become increasingly unpredictable as global climate patterns evolve.

Rescue operations remain the priority in the affected regions, with hopes of locating the missing as communities begin to assess the extent of the damage.

Having grown up in Japan with his Japanese mother, Yamamoto believed for nearly six decades that his American father had abandoned him.

The revelation came as a surprise. As a child he had been bullied, with peers calling him "gaijin," meaning "foreigner," and telling him to "go back to your country."

Yamamoto's childhood memories were marred by loneliness and confusion as he lacked information about his father, knowing only that he was American.

"I had no way to search for him.

There were no clues, no information, nothing," he explained in an interview.

Over time, his relationship with his mother deteriorated, leading to estrangement for more than 30 years after she remarried and had another child.

On the other side of the Pacific Ocean in California, Sharon Lovell grew up in a nurturing home but witnessed her father, John Vieira, mourn the loss of a son he had left behind in Japan during the 1950s when he was stationed there as a soldier.

"I saw my dad cry so many times.

Most of the time, I knew it was because of that," said Lovell, now 71.

According to her, Vieira had fallen in love with a Japanese woman and they intended to marry; however, he was sent back to the United States before the child was born.

The woman told him that the child was a girl and had been put up for adoption.

Vieira searched for the child for years but never succeeded in finding her.

He passed away in 2003, unaware that he had a son, Akihiko Yamamoto.

In 2022, a series of DNA tests on the genealogy platform MyHeritage revealed the connection.

Yamamoto's daughter in Japan and Lovell's cousin in California each submitted their DNA.

When a genetic link was identified between the two, they began exploring their connection, ultimately discovering that Yamamoto is the missing son of John Vieira.

The decision, announced by the Federal Office of Public Health, is rooted in growing evidence that questions the accuracy and effectiveness of conventional mammography.

Data presented to justify the ban highlights that mammograms can result in a high rate of false positives, with estimates suggesting that up to 60% of women who undergo screening may receive incorrect positive results that can lead to unnecessary stress, invasive procedures, and even overtreatment.

Moreover, research has indicated that the radiation exposure linked to mammography, though deemed minimal, poses potential risks that could outweigh benefits, particularly for certain demographics.

The ban follows a series of studies in recent years that have raised questions about the optimal age for women to begin routine screenings, with some suggesting that earlier mammography may lead to overdiagnosis and overtreatment.

In light of these findings, Switzerland's health authorities are advocating for a reassessment of breast cancer screening methodologies, emphasizing the need for alternative, less invasive screening options that could reduce harm while maintaining detection efficacy.

This policy shift is in alignment with a global movement towards re-evaluating traditional cancer screening programs.

Various countries have adopted different approaches based on local health statistics and risks.

In the U.S., for example, new guidelines from the American Cancer Society advocate for personalized assessment rather than standardized annual mammograms for all women starting at age 40.

Opponents of the ban have expressed concerns that discontinuing mammograms could lead to a decline in early breast cancer detection rates.

They argue that while false positives are a concern, the benefits of regular screenings in identifying cancers early, potentially saving lives, should not be overlooked.

Proponents of the ban, however, insist that the focus should shift to more advanced and non-invasive imaging techniques and genetic screenings that promise greater accuracy without the associated risks of mammography.

Gil Duran warned: “Too much wealth creates insanity… democracy is not the preferred operating system for the world.”

And yet, it remains the only system built on consent, accountability, and freedom—until or unless citizens rise to reclaim it. The urgent question is no longer whether Big Tech can shape elections. It is whether democracy can survive them.

And unless people claim back their freedom, at all costs and by all means, it won’t. What replaces it may not be kings or generals, but algo-crats and technocrats: a new totalitarianism in the language of code and the illusion of choice.

1. Introduction: From Platforms to Powerbrokers

What if political parties were no longer the true engines of democracy? What if they had already been replaced by digital platforms, billionaire-owned AI ecosystems, and algorithmic manipulation machines? In today’s digital battlefield, Big Tech no longer merely hosts political discourse—it engineers it. With control over mass data, attention algorithms, ad networks, and emotional engagement loops, companies like X (formerly Twitter), Facebook, and Google have quietly usurped the role of political gatekeepers.

2. Digital Platforms as Political Machines

As the Financial Times observes, Elon Musk doesn’t need to launch a formal “America Party.” With X, he already possesses a high-velocity political machine capable of mobilizing millions. Through targeted messaging, amplification mechanics, and data-fueled narrative control, Musk’s America PAC has leveraged hundreds of millions in campaign funding, social advertising, and AI-generated content—such as deepfakes—to influence swing-state outcomes.

This is not science fiction. It is Silicon Valley’s reality. In 2015, Airbnb pioneered this playbook in San Francisco, defeating Proposition F by mobilizing “Airbnb voters” using proprietary data. The platform-as-party model was born.

3. Tech Billionaires & Corporate Authoritarianism

A growing faction within Silicon Valley espouses techno-authoritarianism—the belief that tech-enabled “network states” should supersede democracy. According to The Verge and Politico, figures like Peter Thiel and Marc Andreessen envision societies governed by corporate logic, centralized surveillance, and algorithmic enforcement.

Content moderation becomes rule by fiat. One executive decision to remove fact-checks or promote conspiracies can recode political reality overnight. These decisions are often made unilaterally, beyond democratic oversight.

4. Data + Algorithms = Targeted Influence

Big Tech excels at converting behavioral data into political power. From Facebook to TikTok, platforms deploy algorithmic micro-targeting to serve political ads shaped to exploit emotional triggers, often without transparency or user consent.

Research published on arXiv and by Stanford researchers indicates that bots and algorithmic amplifiers spread disinformation faster than human users can correct it. In 2016, Russia’s Internet Research Agency deployed botnets across Twitter, and conservative users retweeted their content about 31 times more often than liberal users did.

5. Undermining Democratic Institutions

Surveillance capitalism thrives on the erosion of privacy. In harvesting granular personal data, tech platforms arm governments and private players with tools for manipulation. The New York Post and Universiteit Leiden have documented how these insights are weaponized for election engineering.

Simultaneously, Big Tech has captured regulators through intense lobbying. Harvard’s Kennedy School outlines how legislative processes are being bent by platform influence, stalling meaningful oversight.

6. Destabilization & Digital Authoritarianism

Platforms don’t just reflect political chaos—they manufacture it. According to Tech Policy Press, some elites view destabilization as an asset: an opportunity to erode institutions and usher in centralized alternatives.

China’s export of techno-authoritarian models—combining AI surveillance, biometric tracking, and algorithmic propaganda—has found resonance in other parts of the world. The line between democratic society and controlled information ecosystems is blurring.

7. Case Studies: Democracy on the Brink

Cambridge Analytica (2016–2018): Harvested 87 million Facebook profiles to psychographically target U.S. and Brexit voters.

Romania (2024): TikTok manipulation, allegedly Russian-backed, led to the annulment of the first round of the presidential election after AI-driven disinformation campaigns.

France (2025): X faces criminal investigation over algorithmic favoritism toward far-right content.

Argentina, Bangladesh, India, Canada (2023–2025): Deepfakes, AI voice clones, and resurrected political figures used to distort elections.

8. Decline of Democratic Trust

The Journal of Democracy warns that trust in democratic institutions is collapsing under the weight of manipulated perception. Stanford’s Andrew Hall explains, “Even the belief that misinformation is out there can discredit an entire election.”

As AI-generated content becomes indistinguishable from authentic speech, epistemic trust is collapsing. The very idea of objective truth is under siege.

9. Future Risks & Countermeasures

Emerging threats:

AI-driven misinformation swarms

Zero-click misinformation via chatbots

Algorithmic monocultures that entrench ideological bubbles

Tech-run microstates

Expert recommendations:

Transparency: Mandatory disclosure for political ad targeting and algorithmic curation.

Regulation: Antitrust enforcement and algorithmic audits.

Oversight: Creation of independent digital governance bodies and international AI monitoring agencies.

Resilience: Massive investment in civic media literacy and digital rights education.

The typhoon, expected to bring powerful winds and torrential rainfall, poses significant flash‑flood and landslide risks in both mountainous and urban areas.

Defense and civilian agencies are mobilizing resources, securing vessels and reinforcing infrastructure to mitigate impacts set to begin late Tuesday.

Strong winds, heavy rain and lightning struck abruptly just after midday, overturning the vessel. Rescuers pulled 11 survivors from the water and recovered numerous bodies in a large-scale search and recovery operation.

The overturned boat was later brought ashore. Families awaited clarity on the identities of those lost, and authorities launched an inquiry into safety protocols.

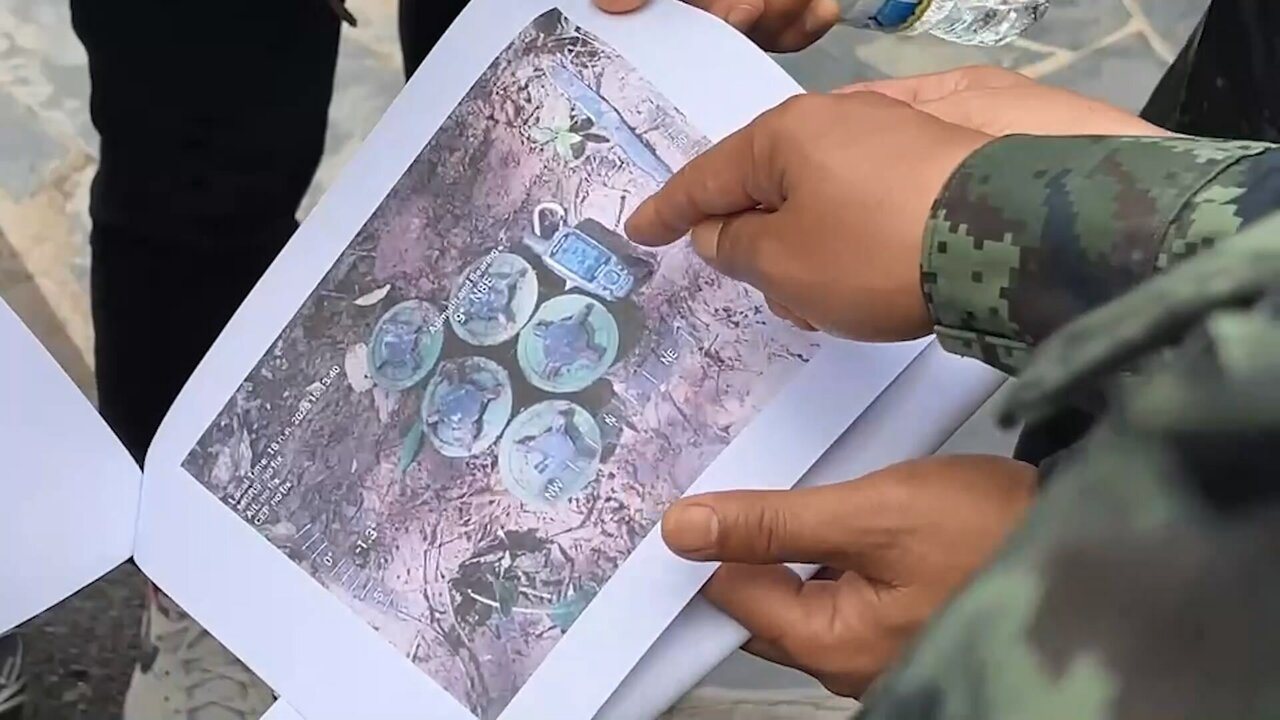

Thailand’s military confirmed that the mines were of a type not previously used by Thai forces and alleged that they had been laid recently, prompting a surge in border tensions.

Cambodia has denied the claims, suggesting the devices may be old remnants, and has urged resolution through diplomatic channels.

The incident marks a sharp escalation in a long‑running territorial dispute between the two nations.

The first container, weighing approximately 17 tonnes, was shipped under a newly signed phytosanitary agreement, symbolizing a strategic effort to diversify exports.

Cambodian officials have urged growers and packaging facilities to maintain clean farming practices to preserve market access and support sustainable trade growth.

The country's low rankings on global press freedom and corruption indexes have driven a small but growing community of Laotians to embrace freedom tech, with local enthusiasts connecting over tools such as the encrypted messenger Signal and the decentralized protocol Nostr to share digital asset knowledge.

This grassroots movement is providing a lifeline for information exchange in a nation struggling with economic hardship and political opacity.

The energy crunch has prompted emergency measures, including halting new mining operations, accelerating hydropower projects and exploring solar‑hydro and wind‑hydro hybrids to stabilize supply and reduce dependence on erratic weather patterns.

The visit marks the first by an ASEAN leader during Trump’s second term and includes discussions with Secretary of State Marco Rubio and Defense Secretary Pete Hegseth on economic cooperation and regional security.

Manila is seeking tariff terms more generous than those secured by Vietnam and Indonesia, while also reinforcing its strategic alliance and expanding U.S. military access in response to rising tensions with China in the South China Sea.

The decision, effective from July 22, was made following guidance from the Office of Civil Defense and authorized by President Marcos Jr, with the Department of Interior and Local Government issuing safety measures for affected localities.

The heavy downpours have also brought hazardous conditions to key urban areas, significantly disrupting daily routines for public employees, students and commuters.

The announcement followed photographs showing swelling around his ankles and bruising on his hand, which was reportedly concealed with makeup during recent public appearances.

The bruising has been attributed to repeated handshakes and his daily aspirin regimen, which is used for cardiovascular prevention, according to official briefings.

Chronic venous insufficiency (CVI) is a long-term but typically benign condition that does not pose an immediate threat to life.

The diagnosis was reached after Mr Trump underwent diagnostic vascular studies, including bilateral lower extremity Doppler ultrasounds and an echocardiogram.

These assessments ruled out more serious conditions, such as deep vein thrombosis, arterial disease, heart failure, renal impairment or systemic illness.

The president’s medical team concluded that he is not currently experiencing discomfort and remains in overall good health.

CVI arises when the valves in the leg veins fail to keep blood flowing upward against gravity, allowing it to pool in the legs.

The condition is more prevalent in adults over 70, and common risk factors include advanced age, obesity, sedentary lifestyle, pregnancy, prolonged standing or sitting, and genetic predisposition.

It affects tens of millions of adults in the United States and is common worldwide.

Symptoms range from mild leg heaviness, swelling and skin discoloration to more severe manifestations such as varicose veins, induration, skin changes and ulceration in advanced stages.

Conservative management typically involves lifestyle modifications such as weight reduction, regular aerobic exercise, elevation of the legs and the use of graduated compression stockings.

These non‑invasive approaches are considered first‑line therapies.

In more advanced or symptomatic cases, minimally invasive interventions—such as sclerotherapy, endovenous thermal ablation (using lasers or radiofrequency), or chemical obliteration—may be employed.

Traditional surgical techniques are now less common.

Medical literature indicates that chronic venous insufficiency is highly prevalent in ageing populations worldwide, with up to half of adults demonstrating some degree of chronic venous disease.

While it is not directly life‑threatening, long‑term complications may include skin ulceration, hemorrhage, dermatitis, and increased risk of thrombotic events.

Emerging evidence also suggests an association between CVI and broader cardiovascular morbidity and overall mortality, independent of age and sex.

Following the public confirmation of the diagnosis, a Republican senator attributed Mr Trump’s condition to stress, though official statements classify CVI as a benign age‑related vascular disorder.

The White House stressed that the evaluation and diagnosis aimed to address public concerns about the president’s visible symptoms.

Mr Trump previously underwent a routine comprehensive physical examination in April 2025, which found no major health issues; the leg swelling was detected afterwards.

This incident has prompted discussions about ethics within corporate governance, particularly in the context of the current investor climate that demands high ethical standards from executives.

Professor Asaf Eckstein, a corporate law and securities lecturer at the Hebrew University, said the repercussions were not surprising given the heightened scrutiny executives face today.

Since the rise of the #MeToo movement, numerous similar cases have emerged, including high-profile executives at large, publicly traded companies who have faced repercussions for inappropriate relationships with employees.

Astronomer, a private company that recently raised approximately $74 million, is planning to secure further investments and potentially go public.

Its reputation is therefore crucial as it prepares for a possible transition to a publicly traded company.

Eckstein emphasized the significance of company image, stating that even private companies must be mindful of their public image due to their obligations to a diverse range of stakeholders, including employees and suppliers.

He noted that the conduct of senior executives, particularly when it contradicts the company’s core values, is increasingly untenable.

Legal expert Shira Laht outlined the implications under Israeli law, where a relationship between a senior employee and a subordinate can automatically raise concerns of sexual harassment.

According to Laht, Byron and Cabot both hold senior positions and are expected to meet high standards of conduct in their leadership roles within the company.

The law places the burden of proof on the senior employee, who in this case is Byron.

In the United States, the ramifications of such situations could be even more severe, with potential legal exposure amounting to millions of dollars.

Investors often inquire about any known sexual harassment issues before investing, understanding the potential for significant legal liabilities.

If it is found that Byron failed to disclose such a relationship to investors during fundraising efforts, it could represent a breach of trust and potentially expose the company to legal action.

When raising capital, companies typically make representations and warranties regarding undisclosed liabilities.

If it is later revealed that the company misrepresented its knowledge of potential legal claims, it could face lawsuits for fraudulent misrepresentation.

The fallout from the video footage showing Byron and Cabot could compound these legal challenges, especially if any harassment concerns of the kind Laht described are substantiated.

Eckstein advises that companies should adopt a clear and enforceable code of ethics, with diligent oversight from the board of directors regarding compliance with laws governing executive conduct and employee relations.

He stressed that the behavior of senior executives, even in their personal lives, can have profound and immediate implications for the company, including its market stability and relationships with investors.

This incident serves as a reminder of the significant scrutiny placed upon corporate leaders and the potential consequences of their actions in the modern business environment.

Authorities confirmed that 568 passengers were rescued after the blaze erupted at sea, with dramatic accounts describing how many jumped overboard to escape the rapidly spreading flames.

Investigations into the cause of the fire are now underway as the nation grapples with another tragic maritime incident.

While officials tout the move as a strategic diversification of funding sources, analysts note that shifting global interest rates and currency risks may complicate uptake and pricing in future auctions.

Authorities say the initiative aligns with efforts to diversify the economy and promote Thailand as a hub for location-independent professionals seeking long-term stays and modern infrastructure.

The government now plans to reclassify cannabis as a controlled substance and impose tighter regulations, marking a significant departure from its previous stance as the first Asian nation to decriminalize the drug.

Experts warn the digital conflict could undermine regional stability and call for a coordinated diplomatic response to address the growing threat of cyber warfare in Southeast Asia.

Thai officials described the act as a blatant violation of international norms and called for an immediate investigation.

Cambodia has rejected the claims and countered with accusations of Thai border patrol breaches, intensifying the diplomatic standoff.

Emergency services are on high alert as the storm is expected to continue impacting the region, with heavy downpours forecast in the coming days.

The collaboration aims to revitalize coastal economies through shared infrastructure, environmental standards, and strategic marketing, positioning Southeast Asia as a premier global destination for maritime travel.

The initiative supports Thailand’s goal of building a skilled workforce and has been credited with transforming the lives of thousands of young people.

The Tourism Authority credits improved connectivity, diverse offerings, and expanded visa policies for driving the upswing in international arrivals.

The effort is part of a broader environmental campaign to counter reef degradation and support biodiversity, with scientists hopeful the approach will help rebalance delicate underwater habitats.

Officials cited urgent capacity needs driven by industrial expansion and climate-related heat waves, sparking renewed debate over the environmental trade-offs of relying on fossil fuels.

The government has stated that diplomatic channels will be pursued to verify the information, as the search for Low intensifies more than a decade after the scandal first emerged.

Authorities have urged the public to remain vigilant and report suspicious activity, as law enforcement ramps up patrols and security operations to restore public confidence amid growing unease.

The appointment aims to facilitate new trade, investment, and education initiatives, underscoring Malaysia’s commitment to diversifying its international partnerships in an increasingly complex global landscape.

The move follows mounting domestic pressure to address pollution and enforce sovereignty over waste management policies, signaling a broader shift toward stricter environmental controls in Southeast Asia.

Meteorologists warn that the storm could bring widespread flooding, landslides, and infrastructure damage.

Provincial governments have activated emergency response plans and are urging citizens to remain indoors and secure property ahead of the storm’s arrival.

The boat capsized amid a powerful storm that struck the region, killing at least 37 people and leaving several others missing.

Rescue teams described the boy’s survival as miraculous, crediting his quick thinking and a fortunate position on the vessel for saving his life.